RUGD Dataset Overview

The RUGD dataset focuses on semantic understanding of unstructured outdoor environments for applications in off-road autonomous navigation. The dataset consists of video sequences captured by the camera onboard a mobile robot platform. The overall goal of the data collection is to provide a dataset that better represents environments lacking the structural cues commonly found in urban autonomous navigation datasets. The platform used for data collection is small enough to maneuver in cluttered environments and rugged enough to traverse challenging terrain, which lets it explore the more unstructured areas of an environment.

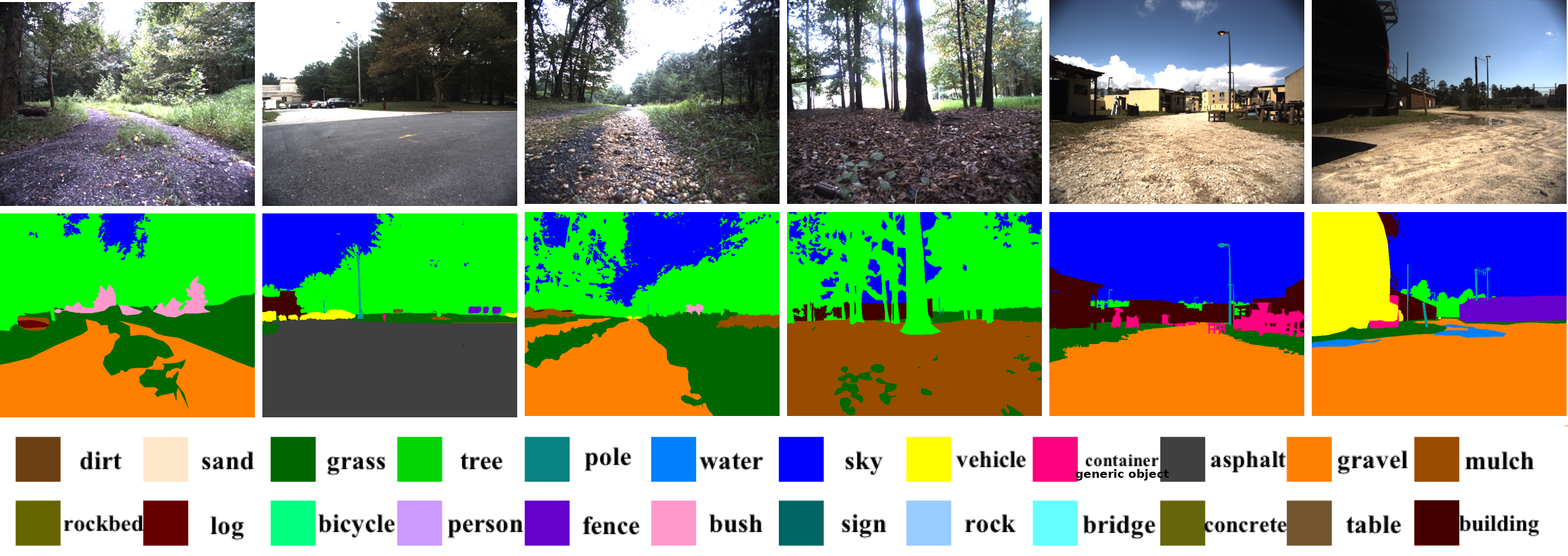

Dense pixel-wise annotations are provided for every fifth frame of each video sequence. The ontology is defined to support fine-grained terrain identification for path planning, as well as object identification for obstacle avoidance and landmark localization. In total, the annotations cover 24 semantic categories, including eight distinct terrain types.
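As an illustration of working with this labeling scheme, the sketch below picks out the frames of a sequence that would carry dense labels under a five-frame stride and counts how many pixels of a label mask fall into each of the 24 categories. It is a minimal sketch under stated assumptions, not the dataset's official tooling: it assumes the first annotated frame is index 0 (the `offset` parameter is a hypothetical knob for other alignments), and it assumes masks have already been converted to integer class IDs 0 to 23. The function names `annotated_frame_indices` and `class_histogram` are hypothetical.

```python
from __future__ import annotations

from typing import Sequence

NUM_CLASSES = 24        # number of semantic categories in the annotations
ANNOTATION_STRIDE = 5   # dense labels are provided on every fifth frame


def annotated_frame_indices(num_frames: int,
                            stride: int = ANNOTATION_STRIDE,
                            offset: int = 0) -> list[int]:
    """Return indices of frames expected to have a dense label.

    Assumption: the first labeled frame is at `offset` (0 by default);
    the actual alignment should be checked against the released files.
    """
    return list(range(offset, num_frames, stride))


def class_histogram(mask: Sequence[Sequence[int]],
                    num_classes: int = NUM_CLASSES) -> list[int]:
    """Count pixels per class in a 2-D mask of integer class IDs.

    Assumption: labels are already integer IDs in [0, num_classes);
    converting color-coded label images to IDs is not shown here.
    """
    counts = [0] * num_classes
    for row in mask:
        for label in row:
            if not 0 <= label < num_classes:
                raise ValueError(f"label {label} outside [0, {num_classes})")
            counts[label] += 1
    return counts


if __name__ == "__main__":
    print(annotated_frame_indices(12))  # frames 0, 5, 10
    toy_mask = [[0, 1], [1, 23]]        # hypothetical 2x2 mask
    print(class_histogram(toy_mask)[:2])
```

Per-class pixel counts like these are one common way to check how terrain classes are balanced before training a segmentation model; the stride and offset are kept as parameters so the sketch can be adapted if the labeled frames turn out to be aligned differently.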